Today’s little snippet shows a variant of the usual cross product implementation in your average SSE vector library. In pseudo-code, we can express the cross product formula as

cross(a, b) = a.yzx * b.zxy - a.zxy * b.yzx;

This is reasonably straightforward to implement as an SSE2 function, named cross_4shuffles because of reasons that will become apparent soon:

__m128 cross_4shuffles(__m128 a, __m128 b)

{

__m128 a_yzx = _mm_shuffle_ps(a, a, _MM_SHUFFLE(3, 0, 2, 1));

__m128 a_zxy = _mm_shuffle_ps(a, a, _MM_SHUFFLE(3, 1, 0, 2));

__m128 b_zxy = _mm_shuffle_ps(b, b, _MM_SHUFFLE(3, 1, 0, 1));

__m128 b_yzx = _mm_shuffle_ps(b, b, _MM_SHUFFLE(3, 0, 2, 1));

return _mm_sub_ps(_mm_mul_ps(a_yzx, b_zxy), _mm_mul_ps(a_zxy, b_yzx));

}

At first glance, there doesn't seem to be any method for optimizing this operation, short of implementing it in hardware as a single operation.

Let's take a closer look at the operations we're performing though to see if there's something we can improve. Here's each line of the cross product operation individually:

cross(a, b).x = a.y * b.z - a.z * b.y;

cross(a, b).y = a.z * b.x - a.x * b.z;

cross(a, b).z = a.x * b.y - a.y * b.x;

Perhaps its possible to get rid of some of the swizzling. Since each line is independent of the others, we can freely rearrange them. Moving the third line on top yields this:

cross(a, b).z = a.x * b.y - a.y * b.x;

cross(a, b).x = a.y * b.z - a.z * b.y;

cross(a, b).y = a.z * b.x - a.x * b.z;

^ ^

Note the marked columns (^) - we got rid of two swizzle operations on our inputs a and b, at the cost of having our output reordered. Expressed in vector notation, we have:

cross(a, b).zxy = a * b.yzx - a.yzx * b;

Swizzling both sides with .yzx (the inverse of .zxy) gives us the cross product again:

cross(a, b) = (a * b.yzx - a.yzx * b).yzx;

Mathematically speaking, we've exploited the fact swizzling distributes over component-wise operations. Now let's code this as SSE2:

__m128 cross_3shuffles(__m128 a, __m128 b)

{

__m128 a_yzx = _mm_shuffle_ps(a, a, _MM_SHUFFLE(3, 0, 2, 1));

__m128 b_yzx = _mm_shuffle_ps(b, b, _MM_SHUFFLE(3, 0, 2, 1));

__m128 c = _mm_sub_ps(_mm_mul_ps(a, b_yzx), _mm_mul_ps(a_yzx, b));

return _mm_shuffle_ps(c, c, _MM_SHUFFLE(3, 0, 2, 1);

}

Nice - we got rid of one of the shuffles.

It seems several other people have come up with this variation independently, but it doesn't seem to be widely known or discussed at all. Some of the publically available SSE math libraries I looked at don't even have a single vec3 cross product as far as I can tell (libsimdpp, SSEPlus, Vc, Agner Fog's VCL), but of the ones that do have one (Eigen, DirectXMath) use the 4-shuffle version. I definitely remember seeing it somewhere on the internet after I came up with it myself, but can't seem to find it anymore. I'd be happy if anyone knowing where to find other references could point me there.

Now which of these two is the better one, speaking performance-wise?

Naively, one might expect that the alternative version is a bit faster, since it uses only 6 intrinsics (3 shuffles, 2 muls, 1 add) instead of 7 (4 shuffles, 2 muls, 1 add). Someone more familiar with how CPUs work will now remark that it's perhaps slower because it has a longer depency chain - the 4-shuffle version has a critical path of length 3 (shuffle -> mul -> add), while the 3-shuffle version has a critical path of length 4 (shuffle -> mul -> add -> shuffle).

It's not immediately clear how that translates into practical performance. Trying to profile a function as short as this one is not going to yield any sort of meaningful quantitative results without taking extreme care to control your profiling environment, such as executing in kernel mode to ensure a fixed CPU speed and prevent interrupts, as well as measuring and correcting for the overhead of the profiling method itself. Either way, in actual code your cross product function is going to be inlined and distributed among dozens of other instructions, so a result of "X is 12% faster" in one synthetic benchmark would not give us any useful insight on precisely why it's faster and how it's going to impact surrounding code.

Instead, I'm going to show how to theoretically analyse a piece of code. The tool I'll use for this is the Intel Architecture Code Analyser, or IACA, which simulates backend instruction latency and throughput for different Intel CPU architectures.

After compiling with MSVC and some manual optimization of the resulting assembly, I've run IACA in LATENCY mode for Haswell on both versions of the cross product. There is a second mode for determining THROUGHPUT, which is the number of cycles per iteration if the same code is processed in a tight loop, but your typical loop body is probably not going to consist of a single cross product.

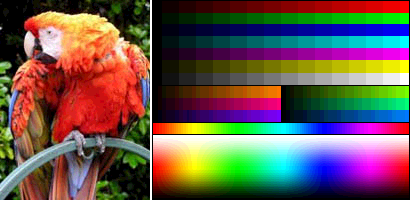

Here's the report for the 4-shuffle version:

Latency Analysis Report

---------------------------

Latency: 12 Cycles

The Resource delay is counted since all the sources of the instructions are ready

and until the needed resource becomes available

| Inst | Resource Delay In Cycles | |

| Num | 0 - DV | 1 | 2 - D | 3 - D | 4 | 5 | 6 | 7 | FE | |

-------------------------------------------------------------------------

| 0 | | | | | | | | | | | movaps xmm0, xmm4

| 1 | | | | | | | | | | | movaps xmm2, xmm3

| 2 | | | | | | | | | | | shufps xmm0, xmm4, 0xd2

| 3 | | | | | | 1 | | | | | shufps xmm2, xmm3, 0xc9

| 4 | | | | | | | | | | | mulps xmm2, xmm0

| 5 | | | | | | | | | 1 | | shufps xmm3, xmm3, 0xd2

| 6 | | | | | | 2 | | | 1 | CP | shufps xmm4, xmm4, 0xc9

| 7 | | | | | | | | | | CP | mulps xmm3, xmm4

| 8 | | | | | | | | | | CP | subps xmm3, xmm2

Resource Conflict on Critical Paths:

-----------------------------------------------------------------

| Port | 0 - DV | 1 | 2 - D | 3 - D | 4 | 5 | 6 | 7 |

-----------------------------------------------------------------

| Cycles | 0 0 | 0 | 0 0 | 0 0 | 0 | 2 | 0 | 0 |

-----------------------------------------------------------------

List Of Delays On Critical Paths

-------------------------------

5 --> 6 1 Cycles Delay On Port5

3 --> 6 1 Cycles Delay On Port5

The rows marked CP denote our critical path. As expected, this is the 3-instruction sequence of shuffle -> mul -> add. The colum FE marks instructions that have undergone macro-fusion, which means that they were combined with other instruction to form a sincle micro-op. I'm not sure what was supposed to be fused with what here, since macro-fusion only affects compare/conditional jump pairs as far as I'm aware; but it could simply be a misinterpretation by IACA.

Note the numbers in the column labelled 5. These numbers denote how many cycles an instruction was stalled because it had to wait for resources from a given execution port. In Haswell, the shuffle instruction is executed on port 5, so the shuffle at instruction 3 has to wait until the shuffle at instruction 2 is finished, while instruction 6, which is on our critical path, has to wait for instructions 3 and 5 to give up resources. This is different from stalls introduced by data dependencies - we've simply reached the limit of instruction-level parallelism here.

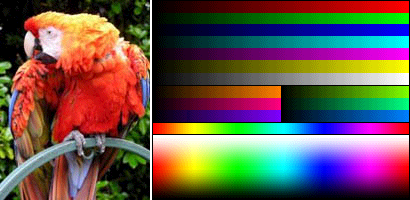

Here's the same analysis for the 3-shuffle version:

Latency Analysis Report

---------------------------

Latency: 11 Cycles

The Resource delay is counted since all the sources of the instructions are ready

and until the needed resource becomes available

| Inst | Resource Delay In Cycles | |

| Num | 0 - DV | 1 | 2 - D | 3 - D | 4 | 5 | 6 | 7 | FE | |

-------------------------------------------------------------------------

| 0 | | | | | | | | | | CP | movaps xmm0, xmm1

| 1 | | | | | | | | | | CP | shufps xmm0, xmm1, 0xc9

| 2 | | | | | | | | | | CP | mulps xmm0, xmm2

| 3 | | | | | | 1 | | | | CP | shufps xmm2, xmm2, 0xc9

| 4 | | | | | | | | | | CP | mulps xmm2, xmm1

| 5 | | | | | | | | | | CP | subps xmm2, xmm0

| 6 | | | | | | | | | | CP | shufps xmm2, xmm2, 0xc9

Resource Conflict on Critical Paths:

-----------------------------------------------------------------

| Port | 0 - DV | 1 | 2 - D | 3 - D | 4 | 5 | 6 | 7 |

-----------------------------------------------------------------

| Cycles | 0 0 | 0 | 0 0 | 0 0 | 0 | 1 | 0 | 0 |

-----------------------------------------------------------------

List Of Delays On Critical Paths

-------------------------------

1 --> 3 1 Cycles Delay On Port5

It may look like our critical path is suddenly 7 instructions long, but in reality we have two critical paths of the same latency: 0 -> 1 -> 2 -> 5 -> 6 and 3 -> 4 -> 5 -> 6. Even though the first chain is longer in terms of instructions, the second one is stalled by one cycle, so they amount to the same number of cycles. Other than that, our critical path is again as predicted.

Unsurprisingly, we have one stall on port 5 with the second shuffle, but since the third shuffle has a data dependency on the previous two, it doesn't have to compete for execution resources. So while our critical path in the 3-shuffle version could have been expected to be more expensive because of the longer dependency chain, the critical path in the 4-shuffle version introduces more latency due to backend resource contention. This difference becomes more pronounced when repeating the analysis for Nehalem, where shuffles have higher latency, and the 4-shuffle version is stalled for a whole 4 cycles on the critical path vs. 2 cycles of stalling on the 3-shuffle version.

This puts the 3-shuffle version slightly ahead when considered by itself. When interleaved with surrounding code it has the additional advantage of reduced register pressure (3 vs 4) and code size (24 vs 31 bytes for 32bit builds). The impact of these properties is difficult to quantify, but overall, the 3-shuffle version wins in my opinion.

Note however that this may change with in the future - with more backend resources for shuffling, the stall could be removed entirely, in which case the 4-shuffle version would win in terms of latency but still lose in terms of register pressure and code size.

The equivalent implementation on AltiVec (which is used on XBox 360 and PS3) has an extra nicety. In AltiVec, the permutation constant is supplied in a scalar CPU register instead of being baked into the instruction code. Because the 3-shuffle version uses the same permutation constants for all shuffles, we free up another CPU register compared to the 4-shuffle version.

Finally, another case where the 3-shuffle version is potentially useful is when implementing the cross product in two-wide SIMD. There, shuffles can be significantly more expensive due to having to move elements across different SIMD registers with multiple instructions, so reducing the number of shuffles can give a more pronounced advantage than with 4-wide SIMD.

TL;DR: Use cross_3shuffles.